What’s New in the Lambda V2 Runtime

In August 2025, the Swift Server Work Group announced the V2 beta release of the Swift implementation of the AWS Lambda Runtime. Thanks to the efforts of Sébastien Stormacq, Adam Fowler, Fabian Fett, Aryan, and other members of the community, we now have a more powerful version of the Lambda Runtime that makes more existing AWS Lambda features available from Swift.

Although the API could still change during the beta, this first release gave a good idea of what to expect from the final version. Update: the package has been out of beta since the release of version 2.0.0.

We’ve covered AWS Lambda in a few previous posts, so this post focuses on the new features and changes. Besides a major rewrite of the runtime’s internals, which now rely on structured concurrency instead of NIO promises and futures, this new version brings three new features, each covered in its own section below: background execution, streaming responses, and Service Lifecycle support.

Migration from V1

Before jumping into the new features, a short note about migrating from V1. Previously, the runtime owned the main function, and you only had to implement the handle method.

Now, the runtime gives control back to the developer, allowing you to use the @main entry point and initialize services in the main function. Alternatively, there’s a new block-based API, which makes it easier to write shorter functions in a main.swift file instead:

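```swift
// main.swift
import AWSLambdaRuntime

// Input and Output stand for your own Decodable/Encodable types;
// someAsyncOperation() is a placeholder for your business logic.
let runtime = LambdaRuntime { (event: Input, context: LambdaContext) async throws -> Output in
    let result = try await someAsyncOperation()
    return Output(data: result)
}

try await runtime.run()
```

In the example above, Input and Output can be any Decodable and Encodable types, such as the ones from the AWSLambdaEvents module. For some triggers, such as S3 or SQS events, there’s no need to return an output at all.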

Sample Project

For this post, we created a sample project that showcases two of the new features: background execution and streaming responses.

Note: Although the project uses the protocol-based approach, you can apply the same logic using the block-based API.

Background Execution

Up until now, when running a Lambda function in Swift, the API was designed so that once the response is sent to the client, the function execution is considered complete, and Lambda freezes the execution environment. Although this is fine in many cases, it can be limiting in some scenarios.

For example, imagine you want to report analytics or run a background job. To achieve this, you would either need to delay the response by performing these operations before sending it, or use a service such as SQS (Simple Queue Service) to schedule jobs executed by another Lambda function:

```swift
func handle(
    _ request: APIGatewayV2Request,
    context: LambdaContext
) async throws -> APIGatewayV2Response {
    // perform other operations
    // that delay the response
    return APIGatewayV2Response(
        statusCode: .ok,
        body: #"{"message": "Great success!" }"#
    )
}
```

API Differences

With the new support for background execution, your function can respond to the caller first, and continue performing other operations, minimizing response time.

To enable background execution, your function needs to conform to the LambdaWithBackgroundProcessingHandler protocol. Unlike the regular LambdaHandler protocol, whose handle function returns a value (Output), the new protocol passes a response writer (LambdaResponseWriter<Output>) as a parameter:

```swift
// LambdaHandler:
func handle(
    _ event: Event,
    context: LambdaContext
) async throws -> Output

// LambdaWithBackgroundProcessingHandler:
func handle(
    _ event: Event,
    outputWriter: some LambdaResponseWriter<Output>,
    context: LambdaContext
) async throws
```

That change means that, instead of returning a value at the end of the function, you can first send your response by calling outputWriter.write(response) and then continue performing other operations. This pattern is reminiscent of res.status(200).send({...}) in Express.js, where the handler can keep running after the response is sent.
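To make that control flow concrete without depending on the runtime, here’s a minimal, self-contained sketch. ResponseWriter, RecordingWriter, EventLog, and handle are hypothetical stand-ins, not the AWSLambdaRuntime API; they only model the idea that the response is written first and the handler keeps working afterwards.

```swift
// Hypothetical stand-in for LambdaResponseWriter; NOT the runtime's real protocol.
protocol ResponseWriter {
    func write(_ output: String) async throws
}

// Records events in order, so we can check what happened when.
actor EventLog {
    private(set) var entries: [String] = []
    func append(_ entry: String) { entries.append(entry) }
}

// A writer that logs the response instead of sending it over the network.
struct RecordingWriter: ResponseWriter {
    let log: EventLog
    func write(_ output: String) async throws {
        await log.append("response: \(output)")
    }
}

// Models a background-processing handler: respond first, then keep working.
func handle(_ input: String, outputWriter: some ResponseWriter, log: EventLog) async throws {
    try await outputWriter.write("Hello, \(input)!")
    // Work that would otherwise have delayed the response, e.g. reporting analytics.
    await log.append("background work done")
}

let eventLog = EventLog()
try await handle("Swift", outputWriter: RecordingWriter(log: eventLog), log: eventLog)
print(await eventLog.entries)
// Prints ["response: Hello, Swift!", "background work done"]
```

The log shows the response entry before the background entry, which is the ordering the new protocol makes possible.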

Implementing a Lambda Handler with Background Execution

This is what an implementation of the LambdaWithBackgroundProcessingHandler protocol could look like:

```swift
// 1
import AWSLambdaEvents
import AWSLambdaRuntime

// 2
@main
struct BackgroundExecutionLambda: LambdaWithBackgroundProcessingHandler {
    // 3
    typealias Input = AWSLambdaEvents.FunctionURLRequest
    typealias Output = AWSLambdaEvents.FunctionURLResponse

    // 4
    static func main() async throws {
        let adapter = LambdaCodableAdapter(handler: BackgroundExecutionLambda())
        let runtime = LambdaRuntime(handler: adapter)
        try await runtime.run()
    }
}
```

The code above does the following:

- Import both the runtime itself and the AWSLambdaEvents module, which contains the Codable types for Lambda triggers and responses.
- Define the struct that conforms to the protocol, and mark it with the @main attribute to indicate it’s the executable entry point.
- Define the Input and Output types, in this case a function URL request and response (identical to the API Gateway V2 request and response types).
- Implement the main function by initializing and running the LambdaRuntime with a LambdaCodableAdapter that wraps the struct itself (BackgroundExecutionLambda).
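Conceptually, a codable adapter sits between the raw event bytes and your typed handler: it decodes the incoming JSON into the handler’s input type, calls the handler, and encodes what the handler produces. The sketch below models only that idea with Foundation; TypedHandler, CodableAdapter, and GreetingHandler are hypothetical names, and the real LambdaCodableAdapter works with ByteBuffer and the runtime’s own protocols rather than Data.

```swift
import Foundation

// Hypothetical typed handler protocol, standing in for the runtime's handler protocols.
protocol TypedHandler {
    associatedtype Input: Decodable
    associatedtype Output: Encodable
    func handle(_ input: Input) async throws -> Output
}

// Hypothetical adapter: raw JSON bytes in, raw JSON bytes out.
struct CodableAdapter<Handler: TypedHandler> {
    let handler: Handler
    let decoder = JSONDecoder()
    let encoder = JSONEncoder()

    func handle(_ rawEvent: Data) async throws -> Data {
        let input = try decoder.decode(Handler.Input.self, from: rawEvent)
        let output = try await handler.handle(input)
        return try encoder.encode(output)
    }
}

// A tiny typed handler that never sees raw bytes.
struct GreetingHandler: TypedHandler {
    struct Input: Decodable { let name: String }
    struct Output: Encodable { let greeting: String }

    func handle(_ input: Input) async throws -> Output {
        Output(greeting: "Hello, \(input.name)!")
    }
}

let adapter = CodableAdapter(handler: GreetingHandler())
let rawResponse = try await adapter.handle(Data(#"{"name":"Lambda"}"#.utf8))
print(String(decoding: rawResponse, as: UTF8.self))
// Prints {"greeting":"Hello, Lambda!"}
```

Keeping the serialization in the adapter is what lets the handler itself deal only with typed values.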

Compiling the code above will fail, since the handle method required by the protocol is not implemented yet. Let’s implement it now:

```swift
func handle(
    _ event: Input,
    outputWriter: some LambdaResponseWriter<Output>,
    context: LambdaContext
) async throws {
    // 1
    let requestPayload: Request
    do {
        requestPayload = try event.decodeBody(Request.self)
    } catch {
        // 2
        try await outputWriter.write(Output(
            statusCode: .internalServerError,
            body: "Couldn't decode request body ('\(event.body ?? "No body")'): \(error)"
        ))
        return
    }

    // 3
    let result = "Sending message: \"\(requestPayload.text)\""
    let response = Output.encoding(Response(result: result))
    try await outputWriter.write(response)

    // 4
    // Placeholder for the work that runs after the response was sent
    // (in the sample project, sending a Telegram message).
    doSomethingInTheBackground()
}
```

This code is longer, but it’s not too complicated if you break it down:

- Try to decode the request body into the Request type (more on that in a moment).
- If decoding fails, write a response with a 500 status code and a message describing the error. Don’t forget to return early to stop the function execution.
- Now that decoding succeeded, use the LambdaResponseWriter to send the response.
- Finally, perform other operations in the background.
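Decoding a function URL request body involves two layers: the Lambda event is a JSON envelope, and its body field is a string that itself contains the client’s JSON (possibly base64-encoded, as signaled by isBase64Encoded). The sketch below models both layers with Foundation only; URLEvent and decodeBody(_:from:) are hypothetical stand-ins, not AWSLambdaEvents’ FunctionURLRequest and its decodeBody method.

```swift
import Foundation

// Hypothetical, trimmed-down stand-in for AWSLambdaEvents.FunctionURLRequest.
struct URLEvent: Decodable {
    let body: String?
    let isBase64Encoded: Bool
}

struct Request: Codable { let text: String }
struct Response: Codable { let result: String }

enum BodyError: Error { case missingBody, invalidBase64 }

// Layer 1: decode the event envelope. Layer 2: decode the body string into T.
func decodeBody<T: Decodable>(_ type: T.Type, from rawEvent: Data) throws -> T {
    let event = try JSONDecoder().decode(URLEvent.self, from: rawEvent)
    guard let body = event.body else { throw BodyError.missingBody }
    let bodyData: Data
    if event.isBase64Encoded {
        guard let decoded = Data(base64Encoded: body) else { throw BodyError.invalidBase64 }
        bodyData = decoded
    } else {
        bodyData = Data(body.utf8)
    }
    return try JSONDecoder().decode(T.self, from: bodyData)
}

// The body is JSON embedded in a JSON string, so its inner quotes are escaped.
let rawEvent = Data(#"{"body": "{\"text\": \"Hi\"}", "isBase64Encoded": false}"#.utf8)
let request = try decodeBody(Request.self, from: rawEvent)

// Encode the response payload that would become the HTTP response body.
let responseData = try JSONEncoder().encode(Response(result: "Sending message: \"\(request.text)\""))
print(String(decoding: responseData, as: UTF8.self))
// Prints {"result":"Sending message: \"Hi\""}
```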

In case you’re wondering what the Request and Response types are, they’re simple structs with a single String property, but they can be any Decodable and Encodable type (respectively), to match your data:

```swift
extension BackgroundExecutionLambda {
    struct Request: Codable {
        let text: String
    }

    struct Response: Codable {
        let result: String
    }
}
```

Testing the Background Execution

Curious to see this in action? We recorded a video showing how it works! In our sample project, we send a message to a Telegram bot after sending the response to the client:

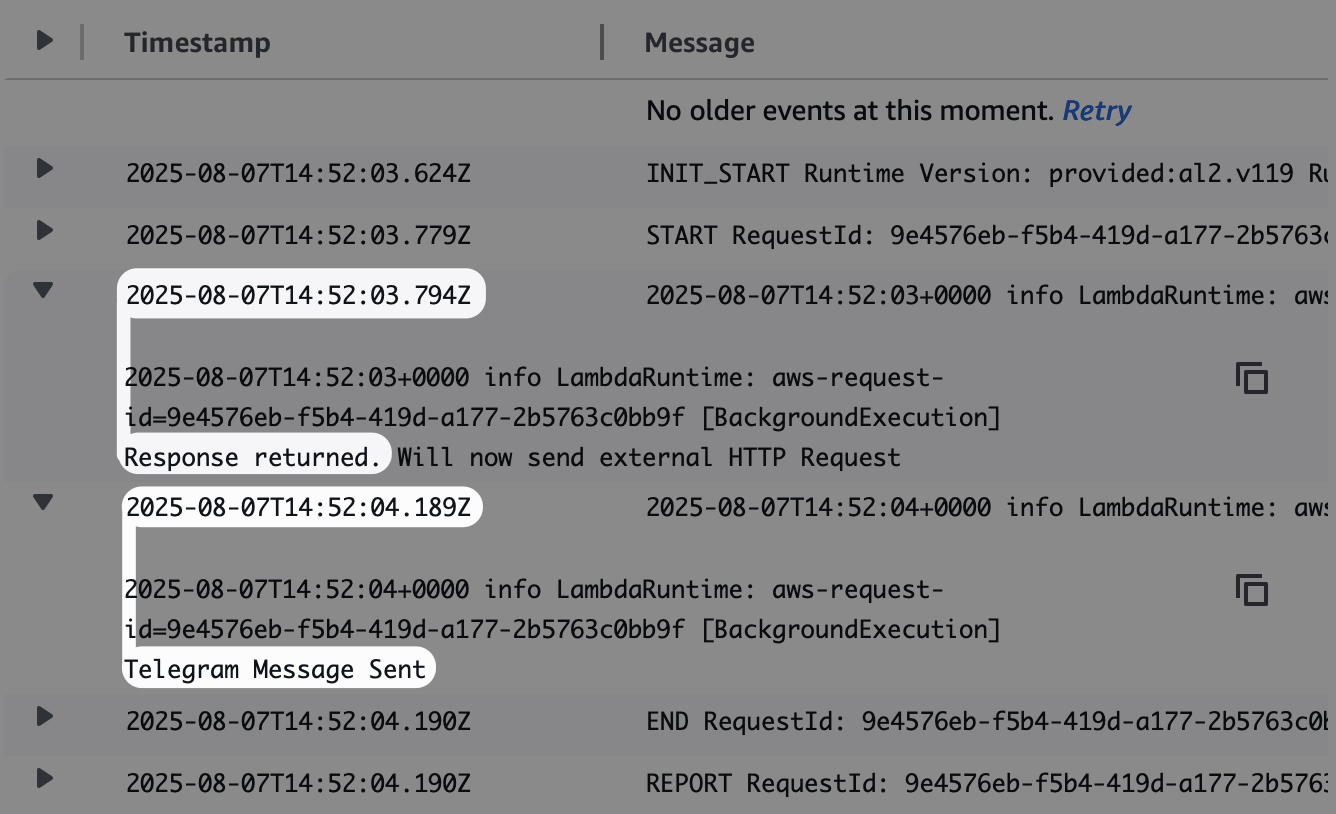

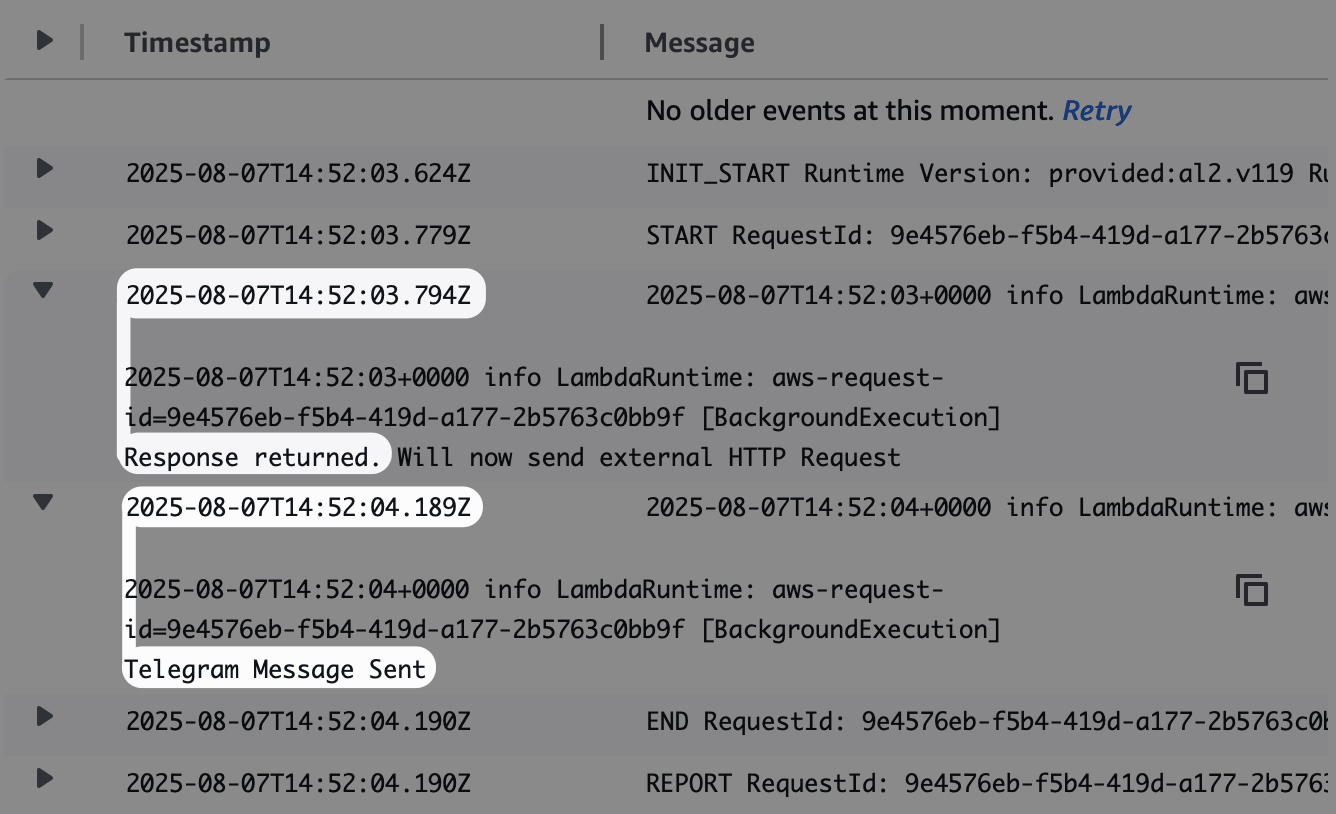

When adding logs via context.logger.info("..."), the log timestamps show the order in which each step ran.

Streaming Responses

The second new feature is support for streaming responses. In regular HTTP calls, the response is sent all at once. With streaming, the server sends the data in chunks, allowing the client to start processing the response before the server has finished sending it. This technique is useful when you want to reduce the time to first byte (TTFB), giving the user a more responsive and dynamic experience.

Important: A downside of streaming with Lambda is that, when you stream long responses, the execution environment may stay active longer than with buffered responses. This can both make the function hit its timeout (depending on your configuration) and increase costs. Consider these two points when deciding whether streaming is the right choice for your use case.

One example of streaming responses that has been very popular recently is LLM-powered chats. While the response is being generated, the client can start displaying the text, and this is exactly the use case we’ll demo here.

Implementing a Streaming Lambda Handler

In another executable target, we create a new main struct, and conform it to the StreamingLambdaHandler protocol. The handle method is similar to the previous example, with some changes:

```swift
mutating func handle(
    _ event: ByteBuffer,
    responseWriter: some LambdaResponseStreamWriter,
    context: LambdaContext
) async throws
```

There are two main differences here: first, the write method of LambdaResponseStreamWriter can be called multiple times, once per chunk. Second, the event parameter is now a ByteBuffer containing the raw, undecoded event data.

This second example also expects a FunctionURLRequest as the Lambda trigger. Inside the request body, we expect a prompt field containing a string. To decode the event (which arrives as a ByteBuffer), start by using a LambdaJSONEventDecoder to transform it into a FunctionURLRequest, and then decode the body of the request into the Request type (containing the prompt):

```swift
let eventDecoder = LambdaJSONEventDecoder(JSONDecoder())
let functionURLRequest = try eventDecoder.decode(AWSLambdaEvents.FunctionURLRequest.self, from: event)
let request = try functionURLRequest.decodeBody(Request.self)
```

Now that the prompt is available, use it to call the OpenAI API, and initiate the streaming response:

```swift
// 1
// In practice, load the API key from the environment or a secrets store.
let client = OpenAIClient(apiKey: "your-api-key")
let response: AsyncThrowingStream<String, any Error>

do {
    // 2
    response = try await client.prompt(request.prompt, context: context)
    try await responseWriter.writeStatusAndHeaders(.init(
        statusCode: 200,
        headers: ["Content-Type": "text/plain"]
    ))
} catch {
    // 3
    try await responseWriter.writeStatusAndHeaders(.init(statusCode: 500))
    try await responseWriter.writeAndFinish(ByteBuffer(string: "Something went wrong: \(error)"))
    return
}
```

Bit by bit:

- Initialize the OpenAI client with an API key (you can find the client’s implementation in the sample project’s repository), and declare the response stream returned by its prompt method.
- Call the prompt method and, if it doesn’t throw, start the response with the writeStatusAndHeaders method, passing a 200 status code and the Content-Type header.
- If an error is thrown, start a 500 response, and use the writeAndFinish method to send an error message.
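The client’s prompt method returns an AsyncThrowingStream<String, any Error>. The sample’s OpenAI client isn’t reproduced here; instead, the sketch below uses a hypothetical fakePrompt function to show one common way to build such a stream: a producer task yields chunks through the stream’s continuation, finishes it normally or with an error, and is cancelled if the consumer stops listening. A real client would yield deltas parsed from the API’s streamed response rather than words from a canned string.

```swift
// Hypothetical error used to simulate an upstream failure mid-stream.
enum StreamError: Error { case upstreamFailed }

// Hypothetical stand-in for the sample's OpenAI client: it splits a canned
// answer into words and yields them one at a time.
func fakePrompt(_ prompt: String, failAfter: Int? = nil) -> AsyncThrowingStream<String, any Error> {
    AsyncThrowingStream<String, any Error> { continuation in
        let producer = Task {
            let words = "You asked: \(prompt)".split(separator: " ")
            for (index, word) in words.enumerated() {
                if let failAfter, index == failAfter {
                    // The error is delivered to the consumer's `for try await` loop.
                    continuation.finish(throwing: StreamError.upstreamFailed)
                    return
                }
                continuation.yield(String(word) + " ")
                try? await Task.sleep(nanoseconds: 10_000_000) // simulate network latency
            }
            continuation.finish()
        }
        // Stop producing if the consumer goes away.
        continuation.onTermination = { _ in producer.cancel() }
    }
}

var received: [String] = []
for try await delta in fakePrompt("streaming?") {
    received.append(delta)
}
print(received.joined())
```

Because a mid-stream failure travels through the stream, it surfaces while iterating, not when prompt is called, so it falls outside the do/catch around the prompt call.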

Streaming the Response

Once the response is started, your handler can iterate over the async stream, and pass along the values to the response writer:

```swift
for try await delta in response {
    try await responseWriter.write(ByteBuffer(string: delta))
}
try await responseWriter.finish()
```

It’s important to call the finish method once the stream is complete, to close the response. Otherwise, the client might keep waiting until the function times out.
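The important detail is the ordering: status and headers first, then any number of body chunks, then exactly one finish. The sketch below models that contract with a hypothetical MockStreamWriter (not the runtime’s LambdaResponseStreamWriter) that records calls and rejects out-of-order writes, using String instead of ByteBuffer so it runs with the standard library alone.

```swift
enum WriterError: Error { case headersNotSent, alreadyFinished }

// Hypothetical stand-in for LambdaResponseStreamWriter that records every call.
actor MockStreamWriter {
    private(set) var statusCode: Int?
    private(set) var chunks: [String] = []
    private(set) var isFinished = false

    func writeStatusAndHeaders(statusCode: Int) throws {
        guard !isFinished else { throw WriterError.alreadyFinished }
        self.statusCode = statusCode
    }

    func write(_ chunk: String) throws {
        guard statusCode != nil else { throw WriterError.headersNotSent }
        guard !isFinished else { throw WriterError.alreadyFinished }
        chunks.append(chunk)
    }

    func finish() throws {
        guard !isFinished else { throw WriterError.alreadyFinished }
        isFinished = true
    }
}

// Forward every delta as soon as it arrives, then close the response.
func forward(_ stream: AsyncThrowingStream<String, any Error>, to writer: MockStreamWriter) async throws {
    try await writer.writeStatusAndHeaders(statusCode: 200)
    for try await delta in stream {
        try await writer.write(delta)
    }
    // Skipping this call leaves the response open.
    try await writer.finish()
}

let deltas = AsyncThrowingStream<String, any Error> { continuation in
    for chunk in ["Hello", ", ", "streaming", "!"] { continuation.yield(chunk) }
    continuation.finish()
}
let writer = MockStreamWriter()
try await forward(deltas, to: writer)
let body = await writer.chunks.joined()
let finished = await writer.isFinished
print(body, finished)
// Prints: Hello, streaming! true
```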

Note: When deploying a streaming Lambda to AWS, you need to set the function invoke mode to RESPONSE_STREAM instead of BUFFERED.

Calling the Streaming Lambda with curl

Of course we also prepared a video to show you how it works in real life!

Here we’re using curl to call the function, passing the --no-buffer flag so that curl prints each chunk as soon as it arrives instead of buffering the output.

Service Lifecycle Support

The Swift Service Lifecycle package was created by Apple and is now maintained by Apple and the community. Its goal is to help manage the lifecycle of applications by providing a unified mechanism for starting and stopping services, along with support for graceful shutdown. It allows developers to organize long-running tasks and ensures resources are cleaned up properly before an application exits.

The runtime repository contains an example that uses this package to start a Lambda function only after a PostgreSQL connection is established.

Explore Further

Although the package is now out of beta, this is still a good time to test the new features and APIs and share feedback with the Swift Server Work Group. Commenting on the announcement post in the Swift Forums or opening an issue in the runtime repository are great ways to do it.

Check out the release notes of version 2.0.0 to learn more about the changes!

The sample project uses Swift Cloud to build and deploy the executable targets. Take a look at the previous posts about it and the Infra.swift file to learn more.

Thanks to Sébastien for the help reviewing the article and its sample code.

If you have any questions or suggestions, feel free to reach out on Mastodon or X.

See you at the next post. Have a good one!

The sample code for this post is available on GitHub
