How to Store Files with Vapor

In the last Dev Conversations episode with Mikaela Caron, she shared how hard it was for her to deal with file uploads in a Vapor app. In a book she was following, the sample code showed how to receive a file and store it in the server’s filesystem. While this is a good starting point, it’s far from a good long-term solution for a production app: nowadays, servers often run in containers whose filesystem is ephemeral, so those files need to be stored externally. For this reason, managed object storage services have become very popular.

In this post, you’ll learn two different ways a client can upload a file with the help of a Swift server app, using AWS S3 as the file storage service. You’ll also see how you can use other S3-compatible services by making a one-line change.

Both approaches below use the SotoS3 package to interact with the S3 API. Although there’s an official AWS SDK for Swift, the sample project in this post uses Soto, a community-maintained SDK.

The Sample Project

The starter sample project, which you can use to follow along, is a minimal Vapor server that only responds to a GET request on the /hello path.

Make sure you check out the starter branch. Throughout the post, you’ll use it to:

- Add the SotoS3 package to the project

- Learn how to create an AWSClient and access it via dependency injection

- Create a controller that handles two different file upload approaches

Adding SotoS3

Open the sample project in Xcode or your editor of choice by opening its Package.swift file. Then, add the Soto package dependency to the dependencies array:

```swift
dependencies: [
    // Vapor and other dependencies...
    .package(url: "https://github.com/soto-project/soto.git", from: "7.3.0"),
]
```

Then, add the SotoS3 package to your App target:

```swift
targets: [
    .target(
        name: "App",
        dependencies: [
            // Vapor and other dependencies...
            .product(name: "SotoS3", package: "soto"),
        ]
    )
]
```

With this, you can import SotoS3 in your app and interact with S3.

The AWS Client and Dependency Injection

Vapor provides a way to attach services to the Application and Request types, similar to how its built-in services, such as caching, logging, and databases, are exposed. You can also attach your own services, register them when the app starts, and access them during the request lifecycle.

To do so, create a new file in the App target named Application+AWSClient.swift. Then, add the following code:

```swift
import SotoCore
import Vapor

extension Application.Services {
    var awsClient: Application.Service<AWSClient> {
        .init(application: application)
    }
}

extension Request.Services {
    var awsClient: AWSClient {
        request.application.services.awsClient.service
    }
}
```

This code creates two extensions: one on Application.Services and one on Request.Services. Although you haven’t created the client itself yet, the second extension shows that the client you’ll register is accessed through the application’s services property.

Now you can register the client in the configure function. Open the configure.swift file and, first, import SotoCore at the top of the file:

```swift
import SotoCore
```

Then, add the following lines before the routes(app) call:

```swift
let awsClient = AWSClient()
app.services.awsClient.use { _ in awsClient }
```

This code creates a new AWSClient instance and registers it as a service in the Application.

Note: The AWSClient needs credentials to sign its requests. By default, it looks them up automatically, for example from the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables, so make sure to set those variables both locally and when deploying. This way, there’s no need to hardcode the keys as strings, which is a good practice for keeping secrets out of your code.
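If those variables are missing, you only find out when the first request to S3 fails. A small startup check can surface the problem earlier. The sketch below is a hypothetical helper, not part of Soto or Vapor, and it assumes your deployment provides credentials through environment variables (Soto can also find them elsewhere, such as the AWS config files). It takes the environment as a parameter so it can be tested in isolation.

```swift
import Foundation

/// Hypothetical helper (not part of Soto or Vapor): returns the names of the
/// AWS credential environment variables that are missing or empty.
func missingAWSCredentialVariables(
    in environment: [String: String] = ProcessInfo.processInfo.environment
) -> [String] {
    let required = ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"]
    return required.filter { name in
        // Treat an empty value the same as a missing one.
        (environment[name] ?? "").isEmpty
    }
}
```

In configure.swift, you could call it with no arguments and log or throw when the returned array isn’t empty.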

Creating the Controller

Now that you can access the AWS client, it’s time to write the controller that will receive the requests. Open the FileUploadController.swift file inside the Controllers folder. There, you’ll find some structs that represent the request and response bodies.

Start by creating the controller type:

```swift
import SotoS3
import Vapor

struct FileUploadController: RouteCollection {
    let bucketName: String

    init(bucketName: String) {
        self.bucketName = bucketName
    }

    func boot(routes: RoutesBuilder) throws {}
}
```

Notice a few things:

- As it’ll use the S3 client, import the SotoS3 package.

- The controller requires a bucketName parameter, and it will use this bucket when performing requests to S3.

- The controller conforms to the RouteCollection protocol, which makes registering routes easier.

- Therefore, it requires a boot method. Right now, it’s empty, but you’ll fill it in later, after implementing the methods that handle the requests.

Handling a File Upload

The first way your server can store files is to receive them directly in the request body and forward them to an S3 bucket. Vapor provides a type that represents a file upload and can be decoded from the request body: the File type.

Implementing the Upload Method

Add the following method to the controller:

```swift
// 1
@Sendable func upload(req: Request) async throws -> FileUploadResponse {
    // 2
    let file = try req.content.decode(File.self)
    // File.extension has no leading dot (e.g. "png"), so add it explicitly
    let key = UUID().uuidString + (file.extension.map { ".\($0)" } ?? "")

    // 3
    let putObjectRequest = S3.PutObjectRequest(
        acl: .publicRead,
        body: .init(buffer: file.data),
        bucket: bucketName,
        key: key
    )

    // 4
    let s3 = S3(client: req.services.awsClient)
    _ = try await s3.putObject(putObjectRequest)

    // 5
    let url = try self.url(for: key, region: s3.region.rawValue)
    return FileUploadResponse(url: url)
}
```

This looks like a lot of code, but it’s easier to understand when divided into smaller parts:

1. This method receives a Request and asynchronously returns a FileUploadResponse, a type that contains the URL of the uploaded file.

2. Start by decoding the multipart form data from the request body into a File. Although the file name is available in the request, use a UUID to generate a unique key for the file, and append the file extension to keep the file type.

3. Build the request you’ll use to upload the file to S3. Here, you can set the permissions to public read (desired for this example), or omit this parameter to use the bucket’s default settings. Pass the body with the file data, the bucket name, and the key you generated in the previous step.

4. Create an instance of the S3 client using the AWSClient you registered in the Application, and execute the async request.

5. Finally, compose the URL of the file (more on that soon) and return it in the response.
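Step 2 relies on Vapor’s File.extension, which returns the extension without the leading dot (for example, png), so the dot has to be added back when building the key. The Foundation-only sketch below mirrors that key logic so you can check it in isolation. objectKey(forFilename:uuid:) is a hypothetical helper, and the uuid parameter exists only to make the output predictable in tests.

```swift
import Foundation

/// Hypothetical helper mirroring the key logic in upload(req:):
/// a UUID followed by the original extension, if there is one.
func objectKey(forFilename filename: String, uuid: UUID = UUID()) -> String {
    // Same rule as Vapor's File.extension: the text after the last dot,
    // but only when the name contains at least one dot.
    let parts = filename.split(separator: ".")
    let fileExtension: String? = parts.count > 1 ? parts.last.map { String($0) } : nil
    // Re-add the dot that the extension doesn't include.
    return uuid.uuidString + (fileExtension.map { ".\($0)" } ?? "")
}
```

For example, photo.png becomes something like E621E1F8-C36C-495A-93FC-0C247A3E6E5F.png, while a name without an extension keeps just the UUID.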

Note: This code uses ACLs to manage file access. To use this feature, the bucket must have ACLs enabled: in the S3 console, under the bucket’s Permissions tab, set Object Ownership to ACLs enabled.

If you copy this code as is, the compiler will complain that the url(for:region:) method doesn’t exist. No worries: this method is much shorter than the previous one. It composes the URL of the file from the bucket name, the region, and the key:

```swift
private func url(for key: String, region: String) throws -> URL {
    // Virtual-hosted-style S3 URL: https://<bucket>.s3.<region>.amazonaws.com/<key>
    let urlString = "https://\(bucketName).s3.\(region).amazonaws.com/\(key)"
    guard let url = URL(string: urlString) else {
        throw Abort(.internalServerError)
    }
    return url
}
```

Registering the Route

Two things are still missing before you can test the file upload.

First, you need to use the method in a route. Inside the boot method, add the following lines:

```swift
let fileUploads = routes.grouped("files")
fileUploads.post("upload", use: upload)
```

This makes the route available at the /files/upload path as a POST request. In a real app, remember to add authentication to this route.

Then, open the routes.swift file and add the following line to the routes function:

```swift
try app.register(collection: FileUploadController(bucketName: "<your-bucket-name>"))
```

This registers the controller in the app and makes the upload method available at the /files/upload path.

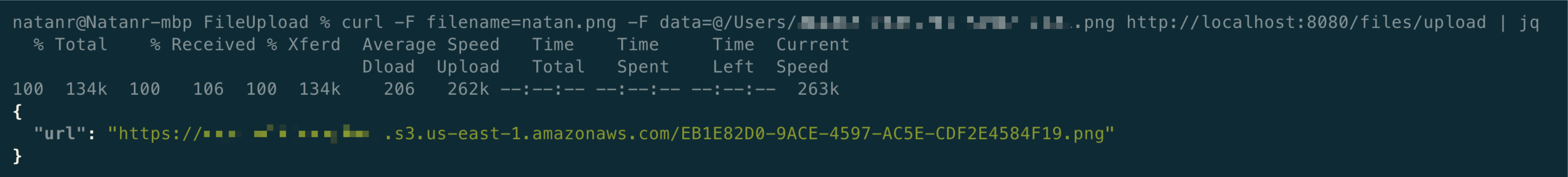

Testing the Upload Method

To test the method you just wrote, you’ll use curl. Remember that the AWS client expects the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables to be set; otherwise, the requests will fail.

In your terminal, run the swift run App command, and once the server is listening on port 8080, run the following command in another terminal, replacing the path with one that points to an existing file:

```shell
curl -F filename=photo.png -F "data=@/path/to/your/file.png" http://localhost:8080/files/upload
```

Oops, you probably got an error! A 413 status code means “Payload Too Large”: by default, Vapor rejects request bodies larger than 16 KB. Fear not, this is a good thing: it means the body size limit is working. Thankfully, changing the limit is a one-liner.

Open the configure.swift file, and add the following line:

```swift
app.routes.defaultMaxBodySize = "1mb"
```

You can change the value to whatever makes sense for your use case.

Now, run the curl command again, and after a few seconds, if your AWS credentials are set up correctly, you should see a JSON response with the URL of the uploaded file:

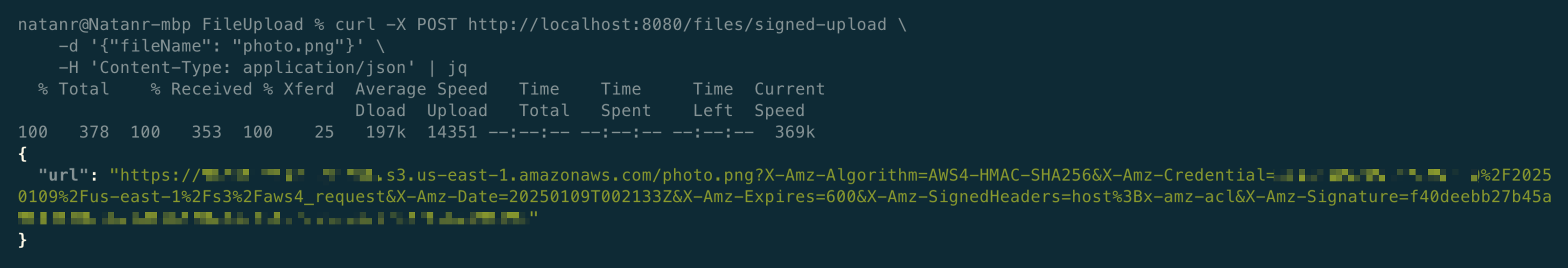
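curl builds the multipart body for you. A native client, such as an iOS app, has to produce the same two parts itself: a filename text field and a data file field, which are the fields the curl command sends and Vapor decodes into File. The sketch below builds that body with Foundation only. multipartUploadBody(boundary:filename:fileData:mimeType:) is a hypothetical helper, and the application/octet-stream default is an assumption you can replace with the file’s real content type.

```swift
import Foundation

/// Hypothetical helper: builds a multipart/form-data body equivalent to
/// curl -F filename=<name> -F "data=@<file>".
func multipartUploadBody(
    boundary: String,
    filename: String,
    fileData: Data,
    mimeType: String = "application/octet-stream"
) -> Data {
    var body = Data()
    let lineBreak = "\r\n"

    // Part 1: plain text field named "filename".
    body.append(Data("--\(boundary)\(lineBreak)".utf8))
    body.append(Data("Content-Disposition: form-data; name=\"filename\"\(lineBreak)\(lineBreak)".utf8))
    body.append(Data("\(filename)\(lineBreak)".utf8))

    // Part 2: file field named "data", carrying the raw bytes.
    body.append(Data("--\(boundary)\(lineBreak)".utf8))
    body.append(Data("Content-Disposition: form-data; name=\"data\"; filename=\"\(filename)\"\(lineBreak)".utf8))
    body.append(Data("Content-Type: \(mimeType)\(lineBreak)\(lineBreak)".utf8))
    body.append(fileData)
    body.append(Data(lineBreak.utf8))

    // Closing boundary.
    body.append(Data("--\(boundary)--\(lineBreak)".utf8))
    return body
}
```

The client would send this body in a POST request to /files/upload with the Content-Type header set to multipart/form-data; boundary=<boundary>, using the same boundary string.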

Using Signed URLs

Do you know what’s better than having your server receive files? Not receiving them at all! Signed URLs give a client temporary access to one specific S3 request: in this case, uploading a file, though they can be used for other operations as well.

This means the upload can go directly from the client to S3, without the file passing through your server.

To implement this, open the FileUploadController file again and add a new method that creates a signed URL:

```swift
// 1
@Sendable func createSignedURL(req: Request) async throws -> SignedUploadResponse {
    // 2
    let request = try req.content.decode(SignedUploadRequest.self)

    // 3
    let s3 = S3(client: req.services.awsClient)
    let url = try self.url(for: request.fileName, region: s3.region.rawValue)

    // 4
    let signedURL = try await s3.signURL(
        url: url,
        httpMethod: .PUT,
        headers: ["x-amz-acl": "public-read"],
        expires: .minutes(10)
    )
    return .init(url: signedURL)
}
```

This is also a bit long, but here’s what each part does:

1. Once again, this method receives a Request and asynchronously returns the URL of the signed request, wrapped in the SignedUploadResponse struct.

2. The SignedUploadRequest struct is the expected request body, and it contains the file name. Just for this example, you use the file name directly as the key instead of a UUID.

3. Create an instance of the S3 client using the AWSClient you registered in the Application, and compose the URL of the file, as you did in the upload method.

4. Finally, create a signed URL for the request, passing the URL to sign, the HTTP method, the header that grants public read access, and, last but not least, when the URL expires. Because the x-amz-acl header is part of the signature, the client must send the same header when it uploads the file.
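In createSignedURL, the key comes straight from the client, so it can contain spaces or other characters that aren’t valid in a URL path. Depending on the platform and Foundation version, URL(string:) may return nil for such strings (making url(for:region:) throw) or encode them for you. One option, sketched below as a hypothetical standalone function, is to percent-encode the key before composing the URL. Verify with your storage provider that signed URLs for encoded keys behave as expected, and prefer generated keys in production.

```swift
import Foundation

/// Hypothetical variant of url(for:region:) that percent-encodes the key
/// before building https://<bucket>.s3.<region>.amazonaws.com/<key>.
func objectURL(bucket: String, region: String, key: String) -> URL? {
    // .urlPathAllowed keeps "/" so keys like "images/photo.png" stay nested,
    // while characters such as spaces become percent-escapes (%20).
    guard let encodedKey = key.addingPercentEncoding(withAllowedCharacters: .urlPathAllowed) else {
        return nil
    }
    return URL(string: "https://\(bucket).s3.\(region).amazonaws.com/\(encodedKey)")
}
```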

To make this method callable, register it next to the upload method in the boot method:

```swift
fileUploads.post("signed-upload", use: createSignedURL)
```

It’s worth repeating: in a real-world app, these routes should require authentication, to avoid exposing operations that should be restricted.

Now, call it using another curl command:

```shell
curl -X POST http://localhost:8080/files/signed-upload -d '{"fileName": "photo.png"}' -H 'Content-Type: application/json'
```

With this URL, which expires after 10 minutes, the client can upload the file to S3 without your server receiving it, and then let the server know once the upload is done.
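On the client side, the upload is a plain HTTP PUT to the signed URL. The sketch below assumes the client decodes the JSON returned by /files/signed-upload into a local mirror of SignedUploadResponse with a single url field (check the actual struct in the starter project), and it sends the same x-amz-acl header that the server signed. SignedUploadResponseDTO and makeSignedUploadRequest(for:fileData:) are hypothetical names.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on Linux
#endif

/// Hypothetical client-side mirror of the server's SignedUploadResponse.
struct SignedUploadResponseDTO: Decodable {
    let url: URL
}

/// Builds the PUT request that uploads fileData directly to S3.
func makeSignedUploadRequest(for response: SignedUploadResponseDTO, fileData: Data) -> URLRequest {
    var request = URLRequest(url: response.url)
    request.httpMethod = "PUT"
    // Must match the header passed to signURL on the server,
    // because it is part of the signature.
    request.setValue("public-read", forHTTPHeaderField: "x-amz-acl")
    request.httpBody = fileData
    return request
}
```

On Apple platforms, the client could then send it with try await URLSession.shared.data(for: request) and check for a successful status code before notifying your server.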

It’s also worth pointing out that signed URLs are not limited to file uploads. You can use them for other operations as well, such as downloads.

Using Other S3-Compatible Services

You might be wondering: “What if I want to use a different file storage service?” That’s a good question, as other services might be cheaper than AWS S3, or might not charge for the network traffic of the files.

Many services are compatible with the S3 API, such as Backblaze B2 and Cloudflare R2, so the answer is: you can!

The SotoS3 package is compatible with any service that implements the S3 API, and you can use the same code you wrote in this post with just one adjustment: the endpoint URL. To use a different service, pass the service’s endpoint URL as the endpoint argument when creating the S3 instance:

```swift
let s3 = S3(
    client: req.services.awsClient,
    endpoint: "https://s3.<region>.backblazeb2.com"
)
```

There are three things to keep in mind when using a different service:

- Each service has its own URL format, so make sure to check the documentation of the service you want to use. With Cloudflare R2, for example, the endpoint format is https://<account-id>.r2.cloudflarestorage.com.

- Don’t forget to adjust the url(for:region:) method to the URL format of the service you want to use.

- Although the credentials don’t come from AWS, they should still be stored in the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables so that Soto picks them up seamlessly.
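Since the endpoint is the main provider-specific piece, one way to keep it in a single place is a small enum like the hypothetical StorageProvider below. It encodes the Backblaze B2 and Cloudflare R2 formats shown in this post plus the standard regional AWS endpoint; for any other provider, and for the public URL format of stored objects, check the provider’s documentation.

```swift
import Foundation

/// Hypothetical enum collecting S3-compatible endpoint formats.
enum StorageProvider {
    case aws(region: String)
    case backblazeB2(region: String)
    case cloudflareR2(accountID: String)

    /// Value to pass as the endpoint argument when creating the S3 instance.
    var endpoint: String {
        switch self {
        case .aws(let region):
            return "https://s3.\(region).amazonaws.com"
        case .backblazeB2(let region):
            return "https://s3.\(region).backblazeb2.com"
        case .cloudflareR2(let accountID):
            return "https://\(accountID).r2.cloudflarestorage.com"
        }
    }
}
```

In the controller, this could become S3(client: req.services.awsClient, endpoint: StorageProvider.backblazeB2(region: region).endpoint). For AWS itself, you can also omit endpoint and let Soto derive it from the region.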

Explore Further

That was a lot of information - congratulations on making it this far!

Here are some notes and links relevant to what was covered in this post:

- To learn more about body size limits in Vapor, check the docs about body streaming. Besides the default limit, you can also set a custom limit for each route.

- Having the correct permissions is key to avoiding security issues, so make sure to check how to disable public access, or enable ACLs for more granular control.

- Upon server shutdown, you should gracefully shut down the AWS client. Although this isn’t covered in this tutorial, you can see how to do it in this commit, using Vapor’s LifecycleHandler.

- Authentication & Authorization: as mentioned twice in this post, your server app should keep routes that deal with file uploads (and downloads!) behind authentication.

If you have any questions, or want to share your thoughts about this article, shoot us a comment on X or Mastodon.

See you at the next post. Have a good one!

Download the starter project to follow along
